Halo e3 HoloLens Experience (Dali)

Background

When I first joined HoloLens, my team was working on a Minecraft demo for the announce event. This demo is still one of the best AR experiences I’ve ever had, mostly because the team had hardcoded the exact room dimensions and furniture placement into the demo. You could see Creepers under a bench and then dig through the bench to shed more light onto them from above. There was a picturesque castle tower rising from the ground right through a coffee table. The final beat of the demo was detonating TNT on the wall, creating a window into a cave where bats flew out. It really felt like the world was made of little 1cm cubes and you could destroy it and rebuild it as you saw fit.

After the announce event, my team worked on a few prototypes. My friend James made the most compelling one. He wrote some networking code that let users tap on a shared point in the real world to give their virtual Unity worlds a shared frame of reference. Once you were in the same world, you could pin holograms to objects and all users would see them. Naturally he chose a Master Chief helmet and enlisted me to be the second user on the network test. At the time, we were using pre-production units called Gravity C3s. They were wired and bulky. Both the wires and the weight disappeared once I watched James pick up and put on his virtual Master Chief helmet.
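This is not James’s code, but the core idea of a shared frame of reference can be sketched in a few lines of Node.js. The sketch assumes each device already knows where the shared anchor sits in its own local space, as a position plus a yaw angle about the vertical (y) axis. A single tapped point fixes position but not heading, so the agreed heading is an assumption here. The names `rotateY`, `toShared`, and `fromShared` are hypothetical.

```javascript
// Hypothetical sketch, not the original Unity networking code.
// Each device knows the shared anchor in its own local frame as a
// position plus a yaw angle (radians) about the vertical y axis.

// Rotate a vector about the y axis by `angle` radians.
function rotateY(v, angle) {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  return { x: v.x * c + v.z * s, y: v.y, z: -v.x * s + v.z * c };
}

// Local device coordinates -> anchor-relative (shared) coordinates.
function toShared(p, anchor) {
  const offset = {
    x: p.x - anchor.position.x,
    y: p.y - anchor.position.y,
    z: p.z - anchor.position.z,
  };
  return rotateY(offset, -anchor.yaw); // undo the anchor's heading
}

// Shared coordinates -> this device's local coordinates.
function fromShared(q, anchor) {
  const r = rotateY(q, anchor.yaw); // reapply the anchor's heading
  return { x: r.x + anchor.position.x, y: r.y + anchor.position.y, z: r.z + anchor.position.z };
}

// Example: device A pins a helmet; device B resolves it in its own frame.
const anchorA = { position: { x: 1, y: 0, z: 2 }, yaw: 0 };
const anchorB = { position: { x: -3, y: 0, z: 5 }, yaw: Math.PI / 2 };
const helmetShared = toShared({ x: 2, y: 1, z: 2 }, anchorA); // what goes over the network
const helmetInB = fromShared(helmetShared, anchorB);
```

Only anchor-relative coordinates need to travel over the network, so each device can keep its own local origin.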

Soon after that demo, we got more serious about building a Halo-themed HoloLens experience. We quickly realized that no one knew how many C3s could share a room without interfering with one another. Each C3 was tethered to a C3PC, a powerful computer with the right connections to drive it. If I remember correctly, the cables connecting each C3 to its C3PC were ~30 ft optical Thunderbolt cables that cost hundreds of dollars each. You had to be very careful not to step on one, especially once you got immersed in an experience. Anyway, we gathered up 6 or 8 C3s, C3PCs, cables, and power supplies, headed down below the Microsoft Visitor Center in Building 92, and booted everything up. Thankfully, it all worked.

Enter Dali

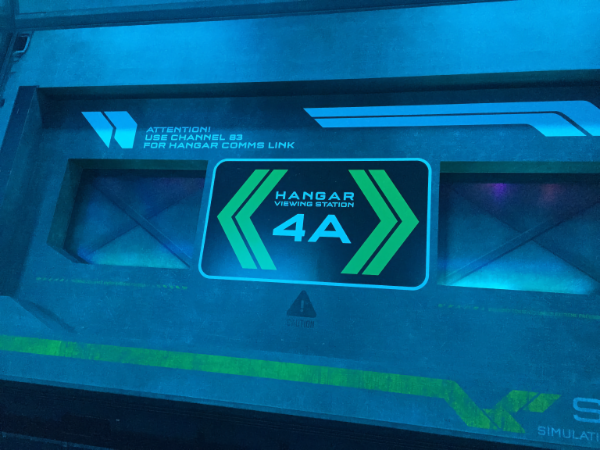

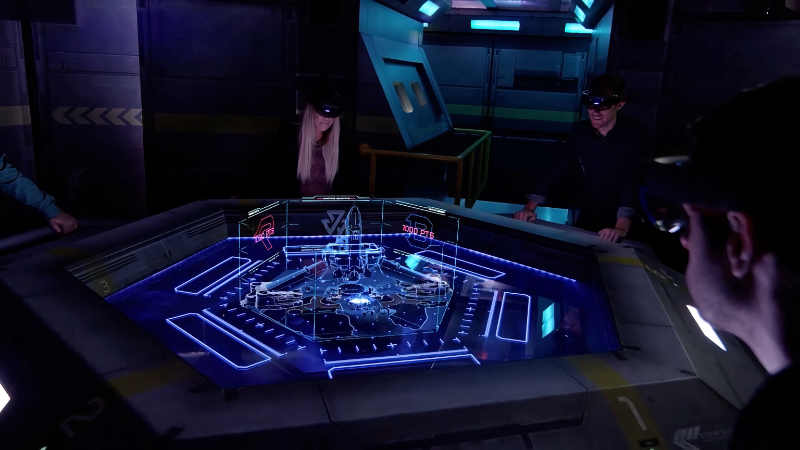

With the network and logistics tests out of the way, we set to work on an experience. The designers came up with a great premise that tied together the goals of 343 Industries and showcased what HoloLens was capable of. We codenamed it Dali. The Minecraft experience before this was codenamed Escher. I guess we liked artists. Anyway, we set our sights on an e3 demo. e3 was a popular video game convention in LA that at this point was open to the public. We wanted to whisk attendees away from the noisy, crowded show floor and straight onto the deck of the UNSC Infinity. There, they’d meet UNSC scientists who would fit them with their devices, assign them to a briefing room, and send them in to be briefed. The briefing itself ran around 5-8 minutes and took place at a specially designed table. After the briefing, attendees would take off their HoloLens and be escorted into an area where they could play Halo 5: Guardians Warzone multiplayer. Sounds easy enough!

DCC

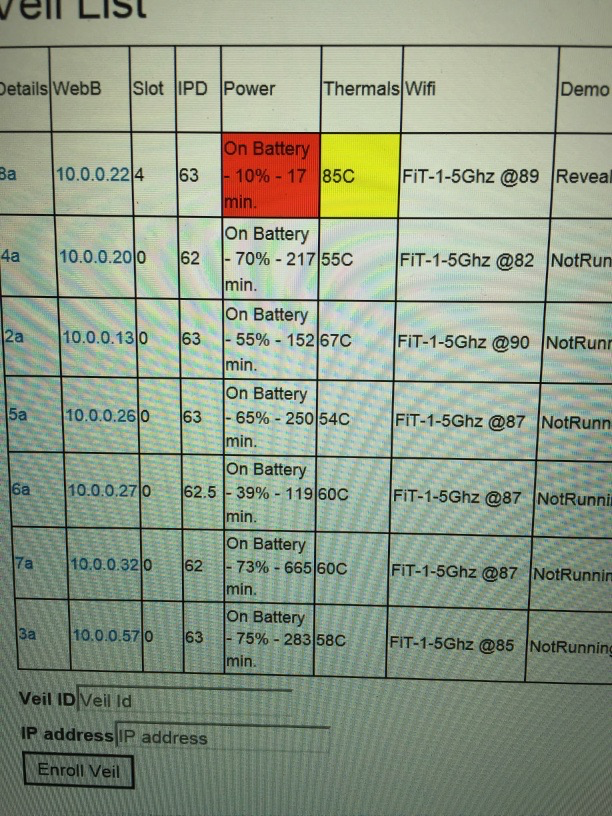

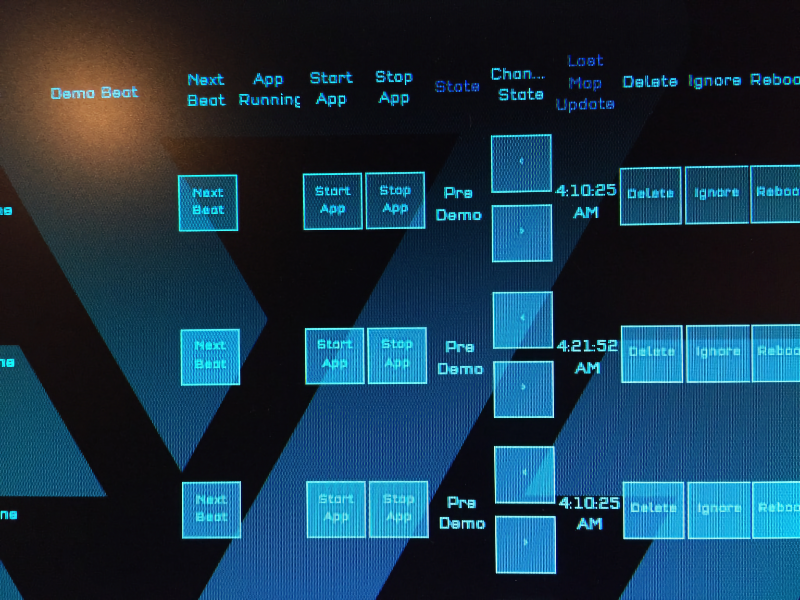

My part of the project was to sync and manage the devices in the experience. Luckily, every HoloLens came with a built-in, OS-level web server that you could query for data via a REST API. I used this, along with a web server running inside the Unity app, to monitor and control the devices before and during our demo. I used Node.js and WebSockets to query and display all the info. At first, I just dropped it into a web page so we could see it all. I called it the Demo Control Center, or DCC.

DCC was critical early on because our devices would overheat and burn through battery quite rapidly. Knowing the state of every device, and whether idle devices were actually cooling down and charging, was essential to running a smooth set of demos.
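DCC’s original code isn’t reproduced here; the Node.js sketch below only illustrates the kind of triage it did. It assumes each device’s status has already been fetched from its built-in web server (Windows Device Portal) and reduced to a small object. The field names (`charging`, `remainingCapacity`, `maximumCapacity`, `thermalStage`), the `role` values, the device IDs, and both thresholds are assumptions for illustration.

```javascript
// Hypothetical sketch of a DCC-style triage pass. The status shape and
// thresholds are assumptions, not the real DCC or Device Portal schema.
const LOW_BATTERY_PCT = 30; // assumed threshold
const HOT_THERMAL_STAGE = 2; // assumed: a higher stage means a hotter device

function batteryPercent(status) {
  return Math.round((100 * status.remainingCapacity) / status.maximumCapacity);
}

// List human-readable problems for one device.
function triage(device) {
  const problems = [];
  const pct = batteryPercent(device.status);
  // A device that isn't on someone's head should be charging.
  if (device.role !== "active" && !device.status.charging) {
    problems.push("idle but not charging");
  }
  if (pct < LOW_BATTERY_PCT) problems.push(`battery at ${pct}%`);
  if (device.status.thermalStage >= HOT_THERMAL_STAGE) problems.push("running hot");
  return problems;
}

// Dashboard summary: only devices that need attention.
function summarize(devices) {
  return devices
    .map((d) => ({ id: d.id, problems: triage(d) }))
    .filter((row) => row.problems.length > 0);
}

const fleet = [
  { id: "HL-001", role: "active", status: { charging: false, remainingCapacity: 8000, maximumCapacity: 10000, thermalStage: 0 } },
  { id: "HL-002", role: "armory", status: { charging: false, remainingCapacity: 2500, maximumCapacity: 10000, thermalStage: 2 } },
];
const report = summarize(fleet);
```

Filtering down to problem devices keeps the dashboard quiet when everything is healthy, which is what you want when scanning dozens of devices between groups.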

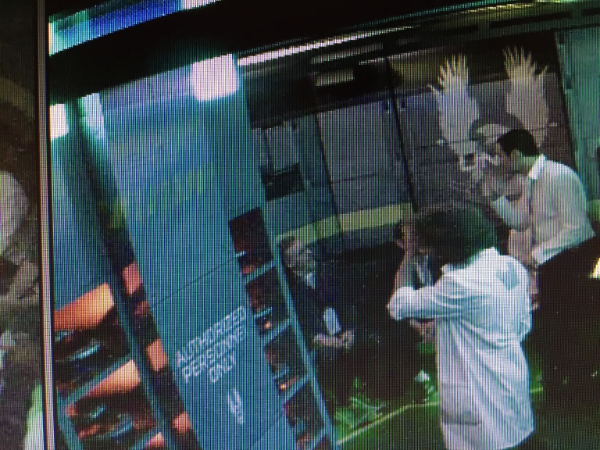

Eventually DCC evolved into the central hub of the demo. The plan was to have in-universe Halo scientists measure your interpupillary distance (IPD), a measurement that is important for a good HoloLens experience. These scientists carried a Microsoft Surface running a full-screen webpage served by DCC. They would enter the device ID, the user’s IPD, and which slot at the table the user was headed to, so the holograms would be oriented properly. This front-of-house webpage was themed to look like a futuristic Halo tablet. Unfortunately, I don’t have a great picture of that interface, but here’s what it looked like when the scientists were fitting Steven Spielberg!
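As a hedged illustration of the fitting step, here is a Node.js sketch of validating what a scientist submits before it reaches a device. The form field names, the IPD range, the device IDs, and the `takenSlots` check are assumptions, not DCC’s actual rules.

```javascript
// Hypothetical sketch: validate a fitting form before DCC acts on it.
const IPD_RANGE_MM = { min: 50, max: 80 }; // assumed plausible range
const SLOTS_PER_TABLE = 6;

function validateFitting(form, knownDevices, takenSlots) {
  const errors = [];
  const ipd = Number(form.ipd); // form values arrive as strings
  const slot = Number(form.slot);
  if (!knownDevices.has(form.deviceId)) {
    errors.push(`unknown device ${form.deviceId}`);
  }
  if (!Number.isFinite(ipd) || ipd < IPD_RANGE_MM.min || ipd > IPD_RANGE_MM.max) {
    errors.push(`IPD out of range: ${form.ipd}`);
  }
  if (!Number.isInteger(slot) || slot < 1 || slot > SLOTS_PER_TABLE) {
    errors.push(`invalid slot: ${form.slot}`);
  } else if (takenSlots.has(slot)) {
    errors.push(`slot ${slot} already assigned`); // two people, one seat
  }
  if (errors.length > 0) return { ok: false, errors };
  return { ok: true, record: { deviceId: form.deviceId, ipdMm: ipd, slot } };
}

const knownDevices = new Set(["HL-007", "HL-008"]);
const good = validateFitting({ deviceId: "HL-007", ipd: "63.5", slot: "3" }, knownDevices, new Set());
const bad = validateFitting({ deviceId: "HL-999", ipd: "95", slot: "3" }, knownDevices, new Set([3]));
```

Collecting every error at once, rather than stopping at the first, lets the person at the tablet fix the whole form in one pass.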

Construction

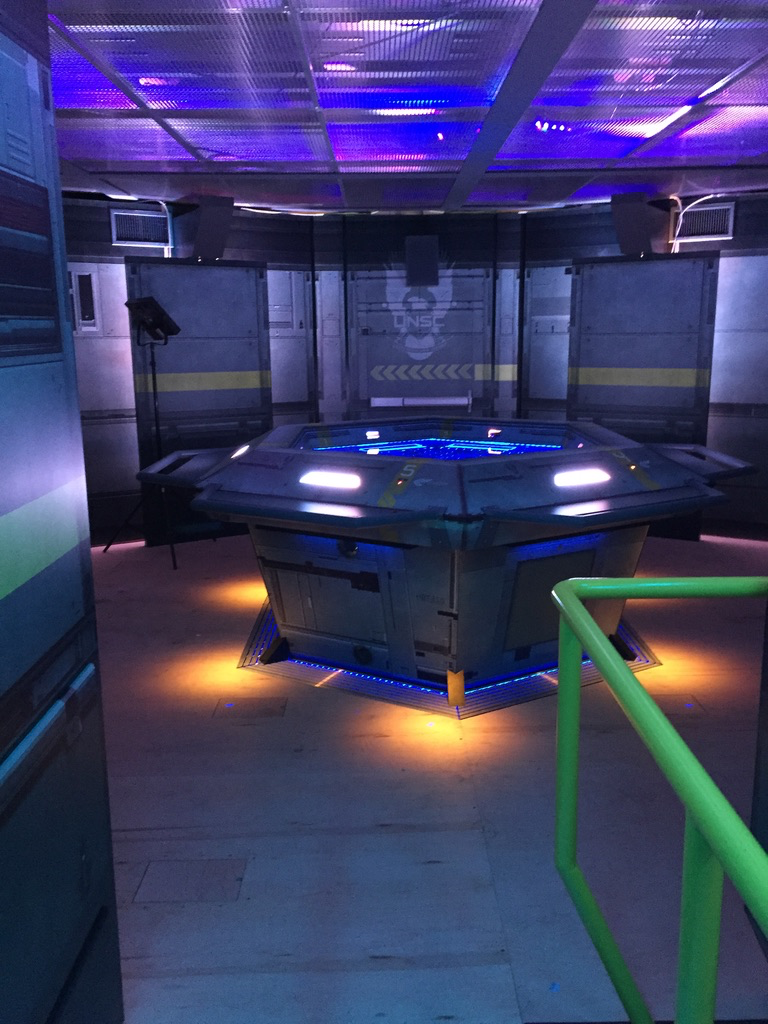

We decided to build an increasingly realistic version of one of the briefing tables, along with the pre-briefing fitting area, all in a basement room of Building 92 called Sparta. I can’t tell you how many hours I’ve spent in this room; I would return here later to work on the Galaxy Explorer project. In Sparta, we set up lightweight walls made of painted R-Max insulation panels, rubber mats, and a holographic comms table as the centerpiece. This table would be the showpiece where most of the holograms were presented. We used edge-lit etched plexiglass to give the table a futuristic feel even when you looked at it without a HoloLens.

We dialed in our homebrew demo over a few weeks and eventually got buy-in to take it to e3. A company out of Anaheim started fabricating the Xbox booth, a two-story behemoth.

Our booth was probably 200 feet long, two stories tall, and so large it came with its own air conditioners, which would later come back to haunt us. Our Halo section took up the entire bottom floor, split into a red side and a blue side. Each side had two tables with six slots each, so every 10-15 minutes we’d have a 12v12 big team battle going on after the holographic briefing.

We traveled down to LA frequently to make sure the construction matched our needs. I don’t think the contractors were used to such precise demands. We had digital content that mapped 1:1 to physical features like our magic window, so if the measurements were off at all, the effect was ruined. In the magic window example, users would look at these “windows” and see into an active space loading dock with Halo Pelicans getting ready for a planetary deployment. The asset creation process for this effect was fascinating, since the HoloLens ran on what was essentially a mobile-grade chipset.

It was amazing to see our DIY set rebuilt by the pros and slowly get more polished week to week.

The lighting and wall decals added so much to the overall feel.

My favorite area was actually the long hallway that connected the calibration area to the briefing rooms. Many of its elements were metal, which reinforced the feeling of being on a ship rather than on a busy expo show floor. To help HoloLens’ visual tracking system, we added a few stickers here and there as feature points. As an Easter egg, we put our names on them.

Here’s the finished two-story booth. It had meeting rooms and a broadcast area up top. On the first floor, you can see a doorway to one side of our Halo area.

BEV Shoot

Since we’d only be able to show the experience to a few hundred people in total, we wanted a video artifact of what it was like. A few weeks before e3, we went down to Anaheim one more time to the completed booth to work out any last kinks and record some footage. A team at HoloLens had invented a lot of smart tech to bring a RED camera into the same coordinate space as a HoloLens and render holograms in the correct position and orientation. In other words, you could view the HoloLens’ world from a Bird’s-Eye View, hence the name BEV rigs.

BEV rigs were complicated to run because of all the moving parts. Thankfully we managed to get a few great shots of the experience and put them together in a small trailer (skip to 39s):

We hired extras to stand around the table and act wowed by what they saw. In reality their devices were completely turned off. That’s my arm at the very beginning grabbing the HoloLens and at the very end taking the Comm Chip.

The experience really looked that good because we designed around the HoloLens’ small field of view and kept the environment dark to minimize the need for super bright holograms. Here’s a shot of me “acting” with the extras:

We had a fantastic Steadicam operator who worked on some of the Alien films. I think he felt right at home on our spaceship.

Trials and Tribulations

HoloLens works by building a map of a space using unique feature points. Unfortunately for us, the 2 tables in each wing looked similar enough that sometimes users’ maps would collapse and the device would think it was in the first part of the hallway when it was actually in the second. This would throw off the precise placement of the holograms on the briefing table. We tried recapturing the map many times but ultimately decided to place unique fiducials around the space to ensure the devices knew where they were. Lucky for us, these markers looked futuristic and not totally out of place for a UNSC spaceship.

In addition to the map collapsing issue, we also faced a water leak from the air conditioners on the second level. Luckily we lost fewer than a handful of units to the water, but it was a stressful few days before the show floor opened!

The booth had an insane networking setup. I don’t remember all the details, but we had switches, routers, wifi stations, static IPs, subnets with no internet access, other subnets with access, and since there were hundreds of wifi APs and thousands of phones in the convention center, a booth-wide Faraday cage to maximize our odds of having a flawless network setup. Unfortunately, some settings from one wing did not get copied over to the other wing exactly. We ended up closing one wing for a bit while we debugged and fixed the issue.
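A wing-to-wing mismatch like this is the kind of thing a mechanical diff can surface. The Node.js sketch below is a hypothetical check: it flattens two nested config objects into dotted keys and lists the keys whose values differ, skipping keys that are supposed to differ per wing. The config shape, keys, and values are all invented for illustration.

```javascript
// Hypothetical sketch: compare two wings' network configs key by key.

// Flatten nested objects into dotted keys; arrays and primitives are
// leaf values, stored as JSON strings so arrays compare by content.
function flatten(obj, prefix = "", out = {}) {
  for (const [key, value] of Object.entries(obj)) {
    const path = prefix ? `${prefix}.${key}` : key;
    if (value !== null && typeof value === "object" && !Array.isArray(value)) {
      flatten(value, path, out);
    } else {
      out[path] = JSON.stringify(value);
    }
  }
  return out;
}

// Keys that differ (or exist on only one side), minus expected differences.
function diffConfigs(a, b, ignore = new Set()) {
  const fa = flatten(a);
  const fb = flatten(b);
  const keys = [...new Set([...Object.keys(fa), ...Object.keys(fb)])].sort();
  return keys
    .filter((k) => !ignore.has(k) && fa[k] !== fb[k])
    .map((k) => ({ key: k, a: fa[k], b: fb[k] }));
}

const redWing = { subnet: "10.1.0.0/24", dhcp: false, wifi: { ssid: "dali-red", channel: 36, band: "5GHz" } };
const blueWing = { subnet: "10.2.0.0/24", dhcp: true, wifi: { ssid: "dali-blue", channel: 36 } };
const diffs = diffConfigs(redWing, blueWing, new Set(["subnet", "wifi.ssid"]));
```

Here `diffs` flags `dhcp` and `wifi.band` while ignoring the subnet and SSID, which are meant to differ between wings.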

The Show Must Go On

We didn’t tell the general public we were bringing HoloLenses to the show. These devices weren’t out yet, and we brought 120 of them in the most ruggedized roadie cases I’ve ever seen. As soon as the show floor opened, people flocked to the booth, and once the first wave finished the briefing and the demo, word spread quickly. On each subsequent day, our line filled up for the entire day within minutes, despite our decent throughput.

One of our goals was to pull off this booth with a Disney World level of attention to detail. We wanted the experience to feel great from the moment you got in line. We had even more scientists walking the line outside of the spaceship with IPD measuring devices. They would hand you a lanyard with your IPD written on it. Eventually you’d be ushered into the fitting area and the sliding door would close behind you. This was when you realized you were not in a normal e3 booth.

In the Disney spirit, rather than asking attendees whether they could see holograms and whether the device fit properly (most people would have no idea!), we designed a digital/physical calibration confirmation area. This was a nod to the way the original Halo determined your look inversion setting by having you look at lights to calibrate, rather than asking you outright. When you looked at the real-world calibration panel, you’d see a digital UNSC hologram and a few other registration marks that aligned with the physical panel.

You can’t quite tell from the picture above, but rather than finishing the ceiling or putting up fake baffles or greebles, we just put up grating and let the booth’s natural guts of conduits and other wires show through. It was a neat way to make it look like a real ship by using the real infrastructure of the booth.

Cutting Room Floor

Our booth was already incredibly ambitious, but we loved the idea of sending folks home with something to remember their experience by. We ordered hundreds of USB keys with rubber overmolding to make them look like Cortana chips. Our plan was to embed webcams in the table, take a surreptitious snapshot, composite it, and then copy it to the USB key. Seemed like a great job for DCC! We embedded six Logitech webcams in the table and wired them to a PC inside it, along with six USB ports. Here’s Jeff inspecting the table to see if there was room for a PC. I still have one of the keys we used to lock the table’s PC doors!

Node.js was my tool of choice at this point, so I wrote another node server to run on each table which would control the webcams, do the compositing, and manage copying the file to the Cortana chip. Here’s a calibration shot of testing the webcam.

I was super excited to get this working end to end! Windows doesn’t really enjoy you plugging and unplugging so many USB keys from the same manufacturer, so part of the setup was plugging them in a strict order, starting from slot 1 and going clockwise to the final slot. This preserved my mapping from drive letter to slot ID, so you’d walk away with a picture of you and not someone else. Here’s what the final picture would look like:

That picture was taken in our DIY setup in Sparta in Building 92. You can see the R-Max insulation panels in the background. Once the image was taken, the file was copied, and the briefing was over, the holographic instructor would create some glowing geometry which was, in the fiction, your mission briefing metadata. This geometry would float out from the center of the table toward your Cortana chip, where it would resolve into a holographic flag saying “Take Comm Chip”. I wrote extra code to detect when the USB key was removed and round-trip that signal back to DCC and the Unity experience, so the holographic flag would hide once you pulled the chip. What you expected to happen happened, and it felt like magic. You can see this whole sequence in the YouTube link above starting at 1:20.
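The slot bookkeeping behind the Cortana chips can be sketched as follows. This is a hypothetical Node.js illustration, not the original table server: drive-letter snapshots are plain arrays like `["C", "E"]`, and reading them from Windows and relaying events to DCC and Unity are left out. It shows why the strict plug-in order mattered: drive letters need not follow slot order, so the mapping has to be recorded as each key appears.

```javascript
// Hypothetical sketch of drive-to-slot tracking for the table keys.

// Letters present in `after` but not in `before`.
function newLetters(before, after) {
  const seen = new Set(before);
  return after.filter((letter) => !seen.has(letter));
}

// Keys are plugged in one at a time in slot order (1, 2, ... clockwise);
// each snapshot must reveal exactly one new drive letter.
function buildSlotMap(baseline, snapshots) {
  const slotByDrive = new Map();
  let previous = baseline;
  snapshots.forEach((snapshot, i) => {
    const added = newLetters(previous, snapshot);
    if (added.length !== 1) {
      throw new Error(`slot ${i + 1}: expected one new drive, saw ${added.length}`);
    }
    slotByDrive.set(added[0], i + 1);
    previous = snapshot;
  });
  return slotByDrive;
}

// Report each slot whose drive has disappeared, once per removal.
// `notify` stands in for the round trip to DCC and Unity.
function detectRemovals(slotByDrive, currentDrives, removedSlots, notify) {
  const present = new Set(currentDrives);
  for (const [drive, slot] of slotByDrive) {
    if (!present.has(drive) && !removedSlots.has(slot)) {
      removedSlots.add(slot);
      notify({ type: "chipRemoved", slot });
    }
  }
}

// Suppose Windows hands out E, F, then D as slots 1-3 are filled.
const slotByDrive = buildSlotMap(["C"], [["C", "E"], ["C", "E", "F"], ["C", "D", "E", "F"]]);
const removedSlots = new Set();
const events = [];
detectRemovals(slotByDrive, ["C", "D", "F"], removedSlots, (e) => events.push(e)); // slot 1 pulled
detectRemovals(slotByDrive, ["C", "D", "F"], removedSlots, (e) => events.push(e)); // no repeat event
detectRemovals(slotByDrive, ["C", "D"], removedSlots, (e) => events.push(e)); // slot 2 pulled
```

In practice `removedSlots` would be cleared between groups, when fresh keys go into the table.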

Unfortunately, after all the work to support this feature, it was cut. Around this time, some memes were circulating of execs looking silly in HoloLenses, so people were sensitive about having complete control over how HoloLens looked in press material. In the worst cases, low lighting and webcam-quality captures of people who weren’t posing could produce pretty bad results. Luckily, we knew this feature was a stretch, so we had built the tables with removable panels that we could replace to cover the webcams.

One other feature that didn’t make the cut was DCC-controlled lighting. I had set up the lighting to be controlled via a Wemo smart plug, but due to our complex network setup, I couldn’t get it working on-site. Instead, we positioned a switch behind a panel and had one of the staff monitoring the table hit it at the right moment in the briefing.

We felt pretty good running our experience with our two backup devices. However, we wanted to plan for every possibility. Once attendees were at the table, it was difficult and disruptive to swap a problem device for a new one and get it synced back into the demo flow. One of our artists, Andy, came up with a great mitigation: we’d have a scientist watch the briefing from one slot, and if anyone had an issue, they would swap devices with that attendee. We called it the “Scientist Swap”. It was great having that failsafe in our back pocket; I think we had to use it once or twice. If at any point everything failed, including our backups, we had a Surface tablet loaded with some videos to keep people entertained briefly before they got to play Halo.

Seeing these manual solutions solve our tech problems taught me to think more broadly and not always try to apply a software or hardware solution to a problem. Sometimes you just need a person.

Other Details

Dali was a career highlight project. But due to the strict hardware, software, staffing, and physical build-out needs, if you didn’t see it, you can now never see it! I like that the experience exists in that space, but I thought it would be a shame if all the behind the scenes context was lost along with the experience. With that in mind, here are a few details I didn’t want to forget.

We fought reliability issues until 4am the night before the show opened. Along with a few other engineers, I went through our setup scripts line by line with Alex Kipman over our shoulders. We used one “golden” device to capture a map of visual markers and then pushed that map to all 119 other devices. I snapped a picture of the time we pushed the map to the last few devices that night:

Dealing with so many devices and so many people meant we needed strict knowledge of where each device was and what state it was in. During a normal run we’d have 24 HoloLenses actively on people’s heads. However, we only needed to accurately synchronize the people at an individual table, so I set up four instances of DCC, each managing 30 devices (4 × 30 = 120). To hedge against reliability issues, we always had two extra devices ready to go. This meant that for a given DCC instance, 8 devices were actively in a demo, 8 more were being fitted and calibrated for the next group, and the remaining 14 were charging in a back room we called The Armory. We diligently monitored charge status and state for all devices, and DCC helped us keep track of everything.

We had one radio channel for communicating within a wing (between The Armory, Control, Line Manager, and Fitting Area) and another for communicating between the red and blue sides, to make sure we released groups simultaneously so they could play 12v12 Halo in the room after the briefing. The Control area was basically a closet, and we always had at least two operators in there. It was also the unofficial break room. We had a strict rule that attendees should only ever see in-universe scientists, never back-of-house staff like me. This meant carefully timing your run and ducking into The Armory or Control during small windows in the flow.
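The per-instance numbers above (8 active, 8 fitting, 14 charging, 30 total) suggest a simple state machine. The Node.js sketch below is a hypothetical illustration of that bookkeeping, not DCC’s code; the state names, device IDs, and the capacity rule (six table slots plus two spares) are assumptions based on the description.

```javascript
// Hypothetical sketch of per-instance device bookkeeping.
// Each device cycles armory -> fitting -> active -> armory.
const NEXT_STATE = { armory: "fitting", fitting: "active", active: "armory" };
const CAPACITY = { active: 8, fitting: 8 }; // 6 table slots + 2 spares each

class DccInstance {
  constructor(deviceIds) {
    // Every device starts out charging in The Armory.
    this.state = new Map(deviceIds.map((id) => [id, "armory"]));
  }

  count(state) {
    let n = 0;
    for (const s of this.state.values()) if (s === state) n++;
    return n;
  }

  // Move a device to its next state, refusing to overfill a stage.
  advance(id) {
    const current = this.state.get(id);
    if (current === undefined) throw new Error(`unknown device ${id}`);
    const target = NEXT_STATE[current];
    if (target in CAPACITY && this.count(target) >= CAPACITY[target]) {
      throw new Error(`${target} is full (${CAPACITY[target]})`);
    }
    this.state.set(id, target);
    return target;
  }
}

const ids = Array.from({ length: 30 }, (_, i) => `red-${i + 1}`);
const dcc = new DccInstance(ids);
for (const id of ids.slice(0, 8)) dcc.advance(id); // group 1: armory -> fitting
for (const id of ids.slice(0, 8)) dcc.advance(id); // group 1: fitting -> active
for (const id of ids.slice(8, 16)) dcc.advance(id); // group 2: armory -> fitting
```

After these steps the instance holds 8 active, 8 fitting, and 14 in The Armory, and any attempt to pull a ninth device into fitting is rejected.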

The Control room housed our water supply, a few snacks, spare gaffer’s tape, and, crucially, a lone trash can. We also had an internet-connected Surface that we could use to text fans some additional Halo media. I wrote a Twilio integration for DCC so the line attendants using the Halo-themed tablets could collect phone numbers as well as IPDs. You can see how DCC grew from humble beginnings to encompass a lot of the booth’s functionality.
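Twilio works with phone numbers in E.164 format, so numbers typed on a tablet need normalizing first. The Node.js sketch below assumes US-only numbers and only builds the parameters (`to`, `from`, `body`) that the official `twilio` Node library’s `client.messages.create` accepts; the actual send, credentials, and media are omitted, and the helper names `toE164US` and `buildText` are hypothetical.

```javascript
// Hypothetical sketch: normalize tablet-entered US numbers to E.164.
function toE164US(raw) {
  const digits = String(raw).replace(/\D/g, ""); // drop spaces, dashes, parens
  if (digits.length === 10) return `+1${digits}`;
  if (digits.length === 11 && digits.startsWith("1")) return `+${digits}`;
  return null; // reject anything else rather than guess
}

// Build the parameters for one outgoing text. With the `twilio` library,
// these would be passed to client.messages.create(...); not sent here.
function buildText(raw, fromNumber, body) {
  const to = toE164US(raw);
  return to ? { to, from: fromNumber, body } : null;
}

const message = buildText("(425) 555-0142", "+12065550100", "Thanks for visiting the UNSC Infinity!");
```

Returning `null` for unrecognized input lets the tablet ask the attendee to re-enter a number instead of silently texting the wrong person.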

The sheer number of devices we were moving, cleaning, and charging meant we had to get creative in how we handled these expensive and delicate unreleased wonders. Here’s my friend Michael showing how easy it was.

Here’s some of the swag from my time in our booth. You can see the Cortana chip (loaded with some press material, no custom selfie sadly), the IPD lanyards, some pins for playing Halo, and a bunch of security lanyards and wristbands that got me into the convention center starting at 6 or 7am each day.

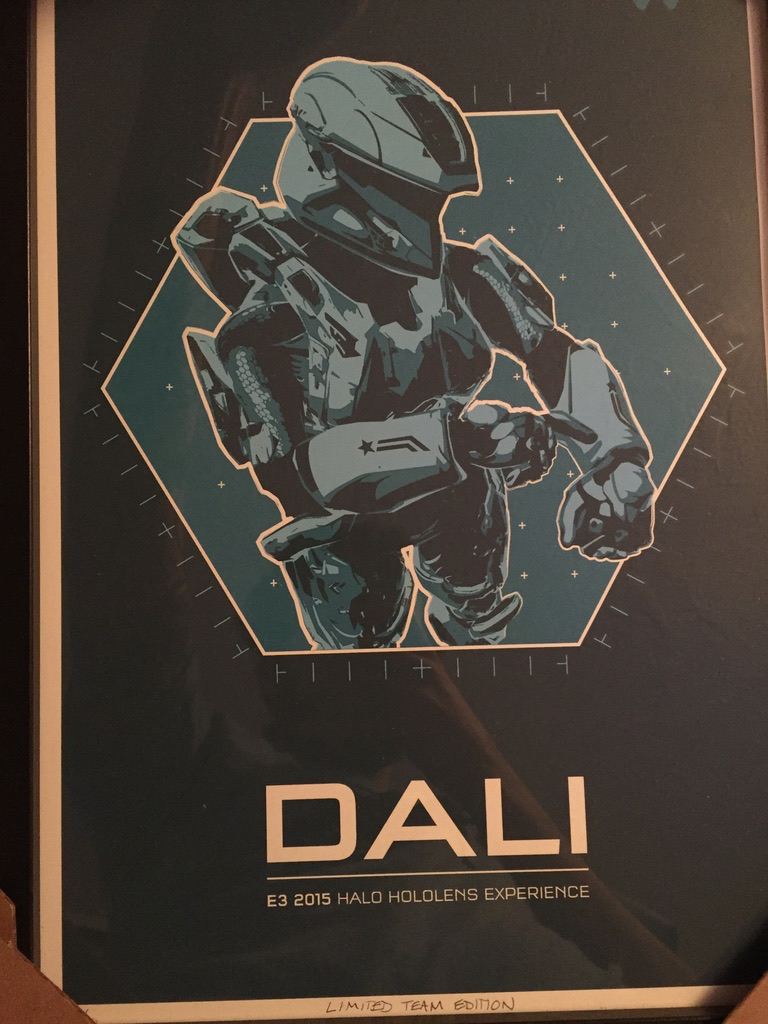

For the team, our Creative Director Shawn got us these awesome Dali posters to commemorate the whole project.

One last shot of where I spent many hours of my life that week in LA.

Running a booth of this size and scope stretched our team, and we all grew from it. There are so many on-the-ground lessons that would be impossible to predict. One last-minute call I was grateful for was adding security cameras and a monitor in the Control room. Without them, the time spent in the above room would have felt much more claustrophobic, and I would have missed Steven Spielberg, Soulja Boy (on his hoverboard/SouljaBoard), and countless others!

Even my hotel room provided no respite from Halo. From evening code reviews (no desk, so we had to use the bed), to the bathroom mirror being plastered with ads, I couldn’t escape Halo that week.

Our booth ended up winning a ton of awards. It’s not totally clear whether this was for our work or the game, but it was fun to be a part of the whole thing.

A few weeks later, my friend James and I got to relive a little Halo 5: Guardians action when our home club got a special edition jersey.

I’d guess our booth was the most expensive booth in e3 history. The build-out, the devices, the salaried Microsoft engineers, the six-figure Faraday cage, it all added up quickly. My friend Jeff joked that based on the cost per booth attendee, it might have been more effective to give each person an Xbox and a copy of Halo 5. Luckily Marketing had a big budget that year.

Final Thoughts

Dali was one of the highest points in my career. It is astounding to me that almost a decade on, I can remember so many details so vividly. There’s a David Bowie quote I love that really defines this project for me personally, and I think for most of the team that worked on it:

“If you feel safe in the area you’re working in, you’re not working in the right area. Always go a little further into the water than you feel you’re capable of being in. Go a little bit out of your depth. And when you don’t feel that your feet are quite touching the bottom, you’re just about in the right place to do something exciting.”

Our feet weren’t quite touching the bottom; in fact, we might have been in over our heads. But we made something exciting and memorable.